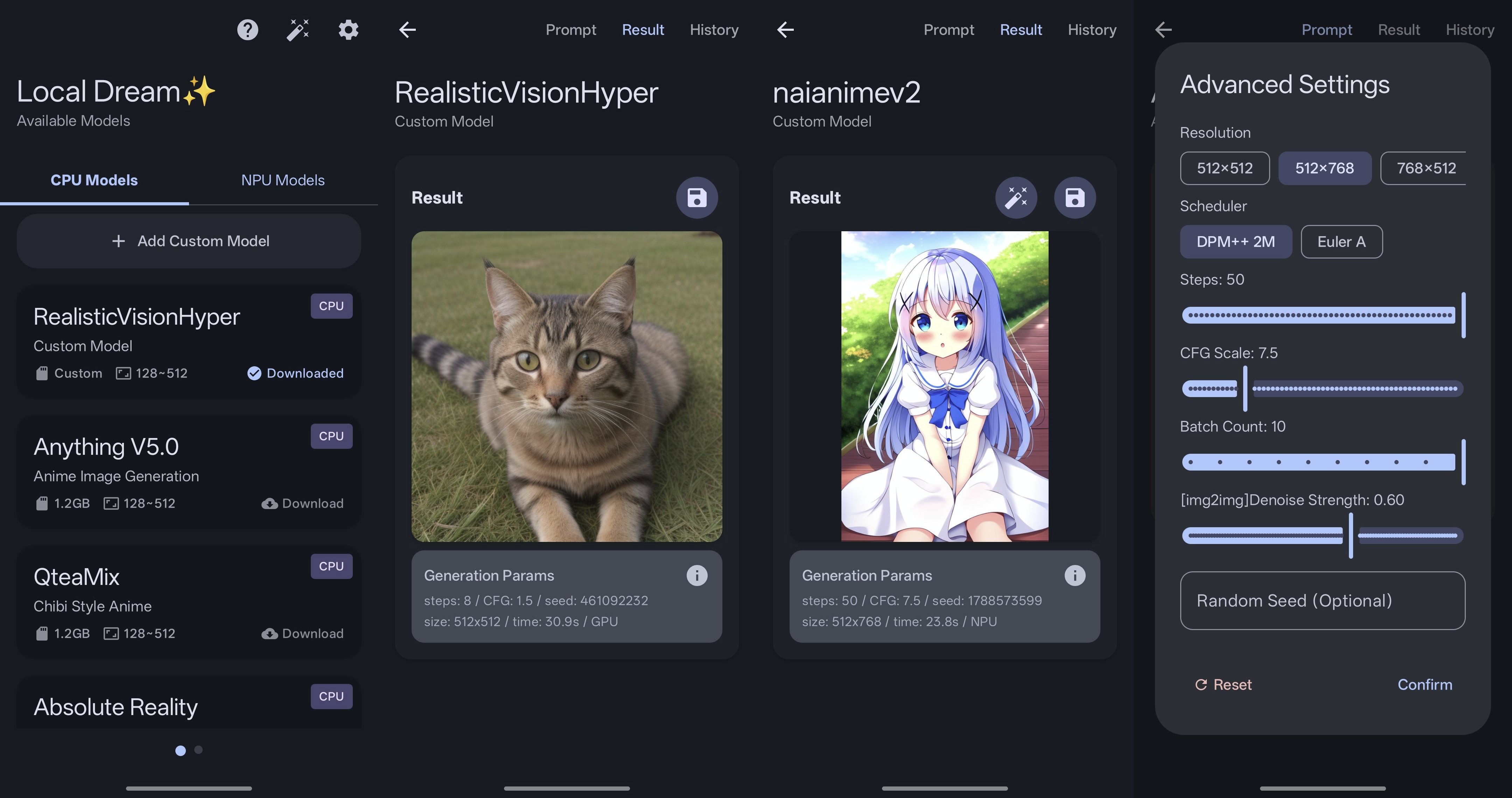

local-dream

Android Stable Diffusion with Snapdragon NPU acceleration

Also supports CPU/GPU inference

About this Repo

This project is now open sourced and completely free. Hope you enjoy it!

If you like it, please consider sponsoring this project.

[!NOTE] The project currently focuses on SD1.5 models. SD2.1 models are no longer maintained because of their poor quality and low popularity. SDXL/Flux models are too large for most devices, so they are not supported for now.

Most users don't get how to properly use highres mode. Please check here.

Now you can import your own NPU models converted using our easy-to-follow NPU Model Conversion Guide. You can also download some pre-converted models from xororz/sd-qnn or Mr-J-369. Download _min if you are using non-flagship chips. Download _8gen1 if you are using 8gen1. Download _8gen2 if you are using 8gen2/3/4/5. We recommend checking the instructions on the original model page to set up prompts and parameters.

You can join our telegram group for discussion or help with testing.
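The suffix rule above can be written down as a small lookup. This is only a sketch: the function name `model_suffix` and the chip labels passed to it are assumptions made for illustration, not something the app or the model repositories define.

```sh
# Hypothetical helper: map a chip label to the model suffix described above.
# The labels ("8gen1", "nonflagship", ...) are illustrative, not an official list.
model_suffix() {
  case "$1" in
    8gen1)                   echo "_8gen1" ;;
    8gen2|8gen3|8gen4|8gen5) echo "_8gen2" ;;
    nonflagship)             echo "_min" ;;
    *)                       echo "unknown chip label: $1" >&2; return 1 ;;
  esac
}

model_suffix 8gen3   # prints _8gen2
```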

🚀 Quick Start

- Download: Get the APK from Releases or Google Play (NSFW filtered)

- Install: Install the APK on your Android device

- Select Models: Open the app and download the model(s) you want to use

✨ Features

- 🎨 txt2img - Generate images from text descriptions

- 🖼️ img2img - Transform existing images

- 🎭 inpaint - Redraw selected areas of images

- custom models - Import your own SD1.5 models for CPU (in app) or NPU (follow conversion guide). You can get some pre-converted models from xororz/sd-qnn or Mr-J-369

- lora support - Support adding LoRA weights to custom CPU models when importing.

- prompt weights - Emphasize certain words in prompts, e.g., (masterpiece:1.5). Same format as Automatic1111

- embeddings - Support for custom embeddings like EasyNegative. SafeTensor format is required. Convert pt to safetensors using this

- upscalers - 4x upscaling with realesrgan_x4plus_anime_6b and 4x-UltraSharpV2_Lite
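To make the prompt-weight format concrete, here is a minimal sketch that splits one token such as (masterpiece:1.5) into its weight and text. It is not the app's parser: the helper name `parse_weight` is hypothetical, it handles a single token only, and treating unweighted text as weight 1.0 is an assumption.

```sh
# Hypothetical helper: split one "(text:weight)" token into "weight text".
# Tokens without the "(text:weight)" shape are reported with an assumed weight of 1.0.
parse_weight() {
  token=$1
  case "$token" in
    \(*:*\))
      inner=${token#"("}     # drop the leading "("
      inner=${inner%")"}     # drop the trailing ")"
      text=${inner%:*}       # everything before the last ":"
      weight=${inner##*:}    # everything after the last ":"
      ;;
    *)
      text=$token
      weight=1.0
      ;;
  esac
  printf '%s %s\n' "$weight" "$text"
}

parse_weight '(masterpiece:1.5)'   # prints: 1.5 masterpiece
```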

🔧 Build Instructions

Note: Building on Linux/WSL is recommended. Other platforms are not verified.

Prerequisites

The following tools are required for building:

- Rust - Install rustup, then run:

# rustup default stable

rustup default 1.84.0 # Please use 1.84.0 for compatibility. Newer versions may cause build failures.

rustup target add aarch64-linux-android

- Ninja - Build system

- CMake - Build configuration
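Before building, it can help to confirm that the tools listed above are on your PATH. The following is a small sketch, not part of the project's build scripts; the helper name `missing_tools` is hypothetical.

```sh
# Hypothetical helper: print the name of every listed tool that is not on PATH.
missing_tools() {
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
  done
}

missing=$(missing_tools rustup ninja cmake)
if [ -n "$missing" ]; then
  echo "Missing build tools:"
  echo "$missing"
else
  echo "All build tools found"
fi
```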

1. Clone Repository

git clone --recursive https://github.com/xororz/local-dream.git

2. Prepare SDKs

- Download QNN SDK: Get QNN_SDK_2.39 and extract

- Download Android NDK: Get Android NDK and extract

- Configure paths:

  - Update QNN_SDK_ROOT in app/src/main/cpp/CMakeLists.txt

  - Update ANDROID_NDK_ROOT in app/src/main/cpp/CMakePresets.json
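To find the lines that need editing, you can search each file for the variable name. This is only a convenience sketch: the helper name `show_setting` is hypothetical, and the exact form of the matching lines in those files is not shown here.

```sh
# Hypothetical helper: print every line of a file that mentions a setting name,
# prefixed with its line number.
show_setting() {
  grep -n -e "$1" "$2"
}

# Run from the repository root:
# show_setting QNN_SDK_ROOT app/src/main/cpp/CMakeLists.txt
# show_setting ANDROID_NDK_ROOT app/src/main/cpp/CMakePresets.json
```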

3. Build Libraries

Linux

cd app/src/main/cpp/

bash ./build.sh

Windows

# Install dependencies if needed:

# winget install Kitware.CMake

# winget install Ninja-build.Ninja

# winget install Rustlang.Rustup

cd app\src\main\cpp\

# Convert patch file (install dos2unix if needed: winget install -e --id waterlan.dos2unix)

dos2unix SampleApp.patch

.\build.bat

macOS

# Install dependencies with Homebrew:

# brew install cmake rust ninja

# Fix CMake version compatibility

sed -i '' '2s/$/ -DCMAKE_POLICY_VERSION_MINIMUM=3.5/' build.sh

bash ./build.sh

4. Build APK

Open this project in Android Studio and navigate to: Build → Generate App Bundles or APKs → Generate APKs

Technical Implementation

NPU Acceleration

- SDK: Qualcomm QNN SDK leveraging Hexagon NPU

- Quantization: W8A16 static quantization for optimal performance

- Resolution: Fixed 512×512 model shape

- Performance: Extremely fast inference speed

CPU/GPU Inference

- Framework: Powered by MNN framework

- Quantization: W8 dynamic quantization

- Resolution: Flexible sizes (128×128, 256×256, 384×384, 512×512)

- Performance: Moderate speed with high compatibility

NPU High Resolution Support

[!IMPORTANT] Quantized high-resolution (>768x768) models may produce images with poor layout. We recommend first generating at 512 resolution (optionally upscaling the result), then using the high-resolution model for img2img, which is essentially Highres.fix. The suggested img2img denoise_strength is around 0.8. This gives images with better layout and details.
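As a rough guide to what a denoise_strength of 0.8 means: many Stable Diffusion front ends run only about steps × strength of the scheduled denoising steps during img2img. Whether Local Dream follows exactly this convention is an assumption, and the helper name `img2img_steps` is hypothetical.

```sh
# Hypothetical helper: estimate how many denoising steps img2img actually runs.
# $1 = scheduled steps, $2 = denoise strength as a percentage (0.8 -> 80).
# Assumes the common "steps * strength" convention, rounded down.
img2img_steps() {
  echo $(( $1 * $2 / 100 ))
}

img2img_steps 20 80   # prints 16
```

Under that assumption, a strength near 0.8 redraws most of the image while still keeping the layout of the 512 input.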

Device Compatibility

NPU Acceleration Support

Compatible with devices featuring:

- Snapdragon 8 Gen 1/8+ Gen 1

- Snapdragon 8 Gen 2

- Snapdragon 8 Gen 3

- Snapdragon 8 Elite

- Snapdragon 8 Elite Gen 5/8 Gen 5

- Non-flagship chips with Hexagon V68 or above (e.g., Snapdragon 7 Gen 1, 8s Gen 3)

Note: Other devices cannot download NPU models

CPU/GPU Support

- RAM Requirement: ~2GB available memory

- Compatibility: Most Android devices from recent years

Available Models

The following models are built-in and can be downloaded directly in the app:

| Model | Type | CPU/GPU | NPU | Clip Skip | Source |

|---|---|---|---|---|---|

| AnythingV5 | SD1.5 | ✅ | ✅ | 2 | CivitAI |

| ChilloutMix | SD1.5 | ✅ | ✅ | 1 | CivitAI |

| Absolute Reality | SD1.5 | ✅ | ✅ | 2 | CivitAI |

| QteaMix | SD1.5 | ✅ | ✅ | 2 | CivitAI |

| CuteYukiMix | SD1.5 | ✅ | ✅ | 2 | CivitAI |

🎲 Seed Settings

Custom seed support for reproducible image generation:

- CPU Mode: Seeds guarantee identical results across different devices with the same parameters

- GPU Mode: Results may differ from CPU mode and can vary between different devices

- NPU Mode: Seeds ensure consistent results only on devices with identical chipsets
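The general idea behind a seed can be shown with any seeded random number generator. The sketch below uses awk's generator purely as a stand-in; it is not the app's sampler, and the helper name `seeded_numbers` is hypothetical.

```sh
# Hypothetical helper: print four pseudo-random numbers for a given seed.
# awk's srand/rand stand in for the noise generator of a diffusion sampler.
seeded_numbers() {
  awk -v seed="$1" 'BEGIN { srand(seed); for (i = 0; i < 4; i++) printf "%.6f ", rand(); print "" }'
}

seeded_numbers 42   # same seed, same awk: same four numbers every time
seeded_numbers 42
seeded_numbers 7    # a different seed starts a different sequence
```

The same seed reproduces the same sequence on one awk implementation, while a different awk implementation may produce other numbers. That loosely mirrors why GPU and NPU results can vary between devices.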

Credits & Acknowledgments

C++ Libraries

- Qualcomm QNN SDK - NPU model execution

- alibaba/MNN - CPU model execution

- xtensor-stack - Tensor operations & scheduling

- mlc-ai/tokenizers-cpp - Text tokenization

- yhirose/cpp-httplib - HTTP server

- nothings/stb - Image processing

- facebook/zstd - Model compression

- nlohmann/json - JSON processing

Android Libraries

- square/okhttp - HTTP client

- coil-kt/coil - Image loading & processing

- MoyuruAizawa/Cropify - Image cropping

- AOSP, Material Design, Jetpack Compose - UI framework

Models

- CompVis/stable-diffusion and all other model creators

- xinntao/Real-ESRGAN - Image upscaling

- Kim2091/UltraSharpV2 - Image upscaling

- bhky/opennsfw2 - NSFW content filtering

💖 Support This Project

If you find Local Dream useful, please consider supporting its development:

What Your Support Helps With:

- Additional Models - More AI model integrations

- New Features - Enhanced functionality and capabilities

- Bug Fixes - Continuous improvement and maintenance

Your sponsorship helps maintain and improve Local Dream for everyone!